1. Introduction

Special education is the branch of education that attempts to minimize the obstacles faced by people with disabilities and to enable learning through individualization. Using individualized education, teachers and schools fit the curriculum and lesson plans for each student based on their disabilities [1,2]. Special education teachers lead the field in creative ways of educating students [3,4] in a range of subjects, including mathematics, science, and mobility. In addition, World Health Organization data indicate that almost 2.2 billion people have a vision impairment, of whom 11.5% (253 million people) are severely visually impaired or blind (VIB), and nearly 24.5 million are students [5,6]. Meanwhile, teachers of the visually impaired are often limited in their capability to constantly provide new educational materials to their students [7,8]. VIB students are similar to sighted people in that education is an important aspect of their lives, but their education is significantly affected by their loss of sight [9]. This is most apparent for types of information that are visual in nature, such as shape, color, location, and position. Visual information is a vital tool in learning because numerous concepts can be explained more easily using visual aids. Although it is hard for VIB students to understand visual concepts, it is not impossible [10]. Limited access is the main hurdle for teachers of VIB students in teaching these concepts. This inaccessibility is due to the limited selection of educational materials, the difficulty of creating specialty materials, and the lack of teacher training in the use of technology or new techniques for teaching VIB students [11,12,13].

Although educational materials are available in VIB schools, these cannot be customized to the student's preferences, thus preventing the individualization of special education. An important aspect that has been considered is attracting the interest of the students during personalized education. In a study by Reber et al., students were introduced to different personalized styles of education: contextual personalization, choice, and active personalization. Their research found that the interest in the lesson of the students in the group taught using individual personalization was increased by solving additional problems from the textbook. This connected the learning material to the personal aspects of their lives. It was also found that, if the students were given a choice between two tasks, their interest increased, possibly due to the increased autonomy. The situational interest was targeted by the choice of the style of instruction. Finally, active personalization increased the interest of the students by involving them in the creation of the learning materials, which enabled them to connect the material to their preferred topics, aspirations, and interests. This method had a greater effect on low-confidence students, and increased re-engagement and retention of their learning. The situational interest effects were observed in the short term, and individual interest in learning was promoted in the long term [14]. As we experienced firsthand at the Taichung Special Education School for the Visually Impaired, teachers of VIB students have made significant advances in terms of creativity in teaching concepts. For example, a teacher constructed an enlarged model of the shoelaces on sneakers, as shown in Figure 1.
This work by the teachers highlights the creativity needed to teach basic concepts to their VIB students. However, these teachers have limited skills and training in using modern technologies, such as 3D printing and 3D modeling. In research conducted by Chen et al. [9], such technologies were shown to improve the methods of teachers.

Graphical information has been found to be crucial in the fields of science and mathematics. As a result, VIB students and workers are usually presented with less information than the general population because of the lack of accessible graphics available to them. In general, graphics are essential for portraying different aspects of science and its applications. Gupta et al. stated that graphical information would increase the career opportunities for VIB students [15,16]. A training system for reading and understanding tactile graphics is therefore a key step in preparing VIB students to continue with, and adapt to, the more complex tactile graphics they will encounter in their future studies.

In this research, we developed a system called TacPic, in which tactile educational materials (TEM) are generated without the tedious and difficult process of making them by hand or through 3D modeling. Currently, our system can be used to develop tactile flashcards, tactile maps, and tactile peg puzzles, which combine interactive tactile graphics and braille captions. The materials developed can be used as tools for training and learning shapes, geography, and other concepts. Our work aims to improve the development of educational materials, not only for VIB students, but potentially also for normal-sighted students.

2. Materials and Methods

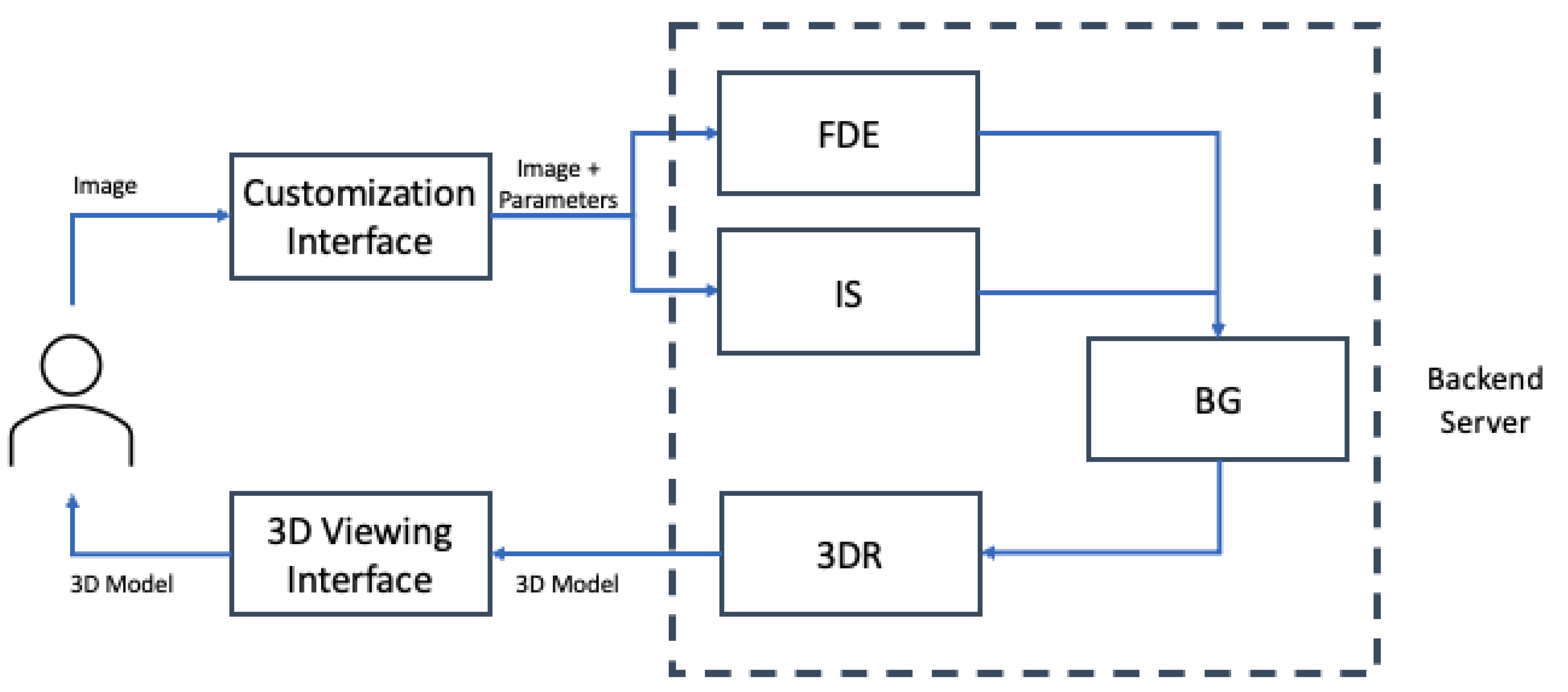

The TacPic system is built using a modular approach and passes objects and images on to a different module for each process. Each module within our system conducts a specific task and outputs its result for the next module to use. These modules are also built with different programming languages and computing platforms that are integrated with each other. The modules are responsible for the following tasks: Customization Interface, Feature Detection and Extraction (FDE), Instance Segmentation (IS), Braille Generation (BG), 3D Reconstruction (3DR), and 3D Viewing Interface. FDE, IS, BG, and 3DR are included within the backend server. The system infrastructure is shown in Figure 2.
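The module chaining described above can be sketched as an ordered list of stages, each consuming the previous stage's output. This is a minimal illustration under stated assumptions: the stage functions (`feature_detection`, `instance_segmentation`, `braille_generation`, `reconstruction_3d`) and the dictionary-based job format are hypothetical stand-ins, not the TacPic implementation, whose modules run as separate cloud services.

```python
from typing import Any, Callable, Dict, List, Tuple

Job = Dict[str, Any]
Stage = Callable[[Job], Job]

# Hypothetical stand-ins for the backend modules (FDE, IS, BG, 3DR).
# Real modules would run detection models, braille layout, and meshing.
def feature_detection(job: Job) -> Job:
    return {**job, "features": f"features({job['image']})"}

def instance_segmentation(job: Job) -> Job:
    return {**job, "masks": [f"mask_of({job['features']})"]}

def braille_generation(job: Job) -> Job:
    return {**job, "braille": job["label"].lower()}

def reconstruction_3d(job: Job) -> Job:
    return {**job, "stl": f"{job['image']}.stl"}

BACKEND: List[Tuple[str, Stage]] = [
    ("FDE", feature_detection),
    ("IS", instance_segmentation),
    ("BG", braille_generation),
    ("3DR", reconstruction_3d),
]

def run_backend(job: Job, stages: List[Tuple[str, Stage]] = BACKEND) -> Job:
    """Pass the job through each module in order; each stage sees the previous output."""
    for name, stage in stages:
        job = stage(job)
        job = {**job, "trace": job.get("trace", []) + [name]}
    return job
```

Calling `run_backend({"image": "cat.png", "label": "Cat"})` returns a job whose `trace` records the module order. Keeping each stage a pure function of its input is what lets stages be swapped per material type.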

The flow of the system is similar for all tactile materials, but the algorithms and models used in the backend differ for each type. We propose using this system architecture to develop tactile flashcards, tactile maps, and tactile peg puzzles. Tactile flashcards are generated by creating the shapes of the objects and placing a braille caption on top of them as an identifier of the object. These can be used for training to understand shapes and their corresponding names. Geography tactile maps are generated by creating the different cities and segments of a map and labelling the cities within them. These can be used in geography lessons so that VIB students can gain an understanding of the location and geometry of the places within a state. They can also be used to create topographic maps to understand the terrain of a certain location. Tactile peg puzzles are generated by segmenting an image and creating a base into which the segmented image can fit. These can be used to mix and match each object with its label based on its shape.

2.1. Deployment of the TacPic System

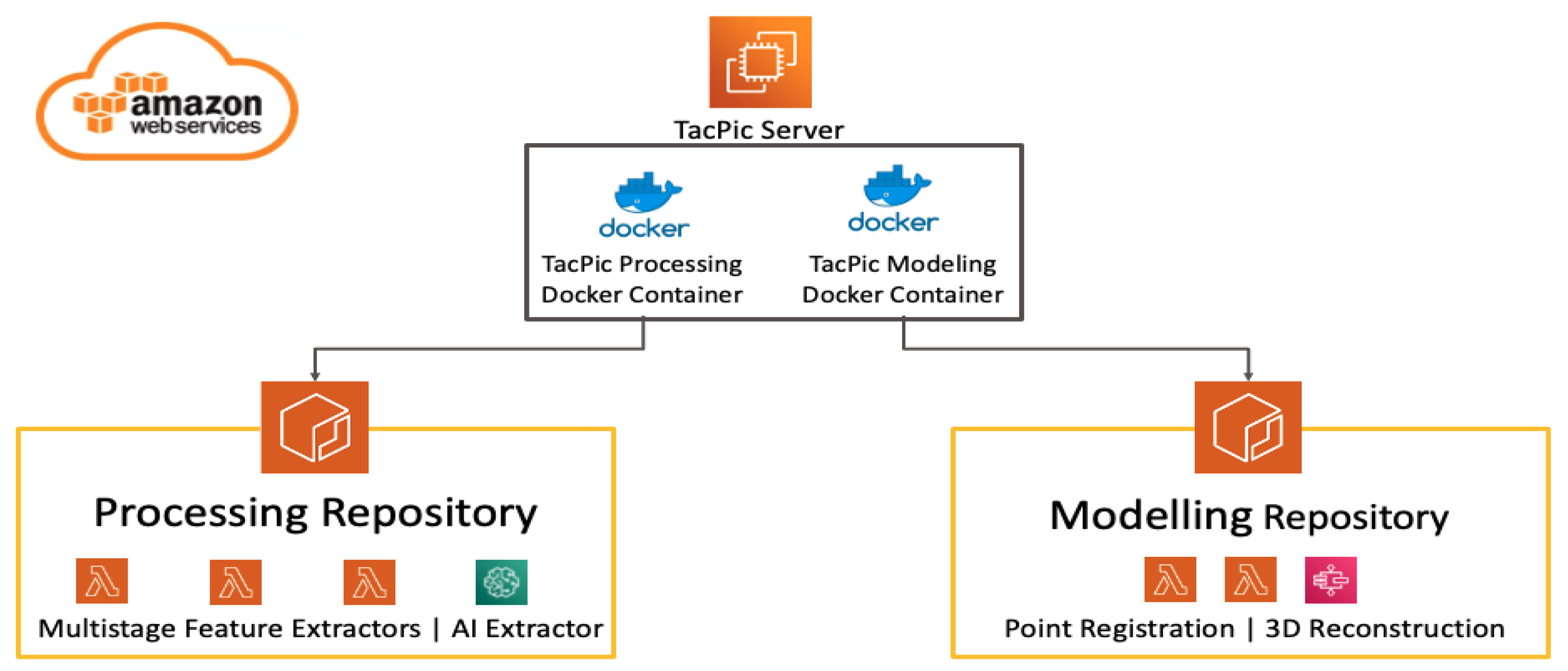

The TacPic system leverages AI and cloud computing technologies to create TEM for VIB education. AI is deployed as a lightweight implementation that teachers and parents of visually impaired students can easily use on their own devices without needing expensive hardware or training in artificial intelligence, 3D reconstruction, 3D modeling, or 3D printing. The system is deployed as a web interface with an Amazon Web Services (AWS) backend. By leveraging the scalability of deployment on AWS servers, we were able to allocate the appropriate amount of processing power to each step of the development pipeline.

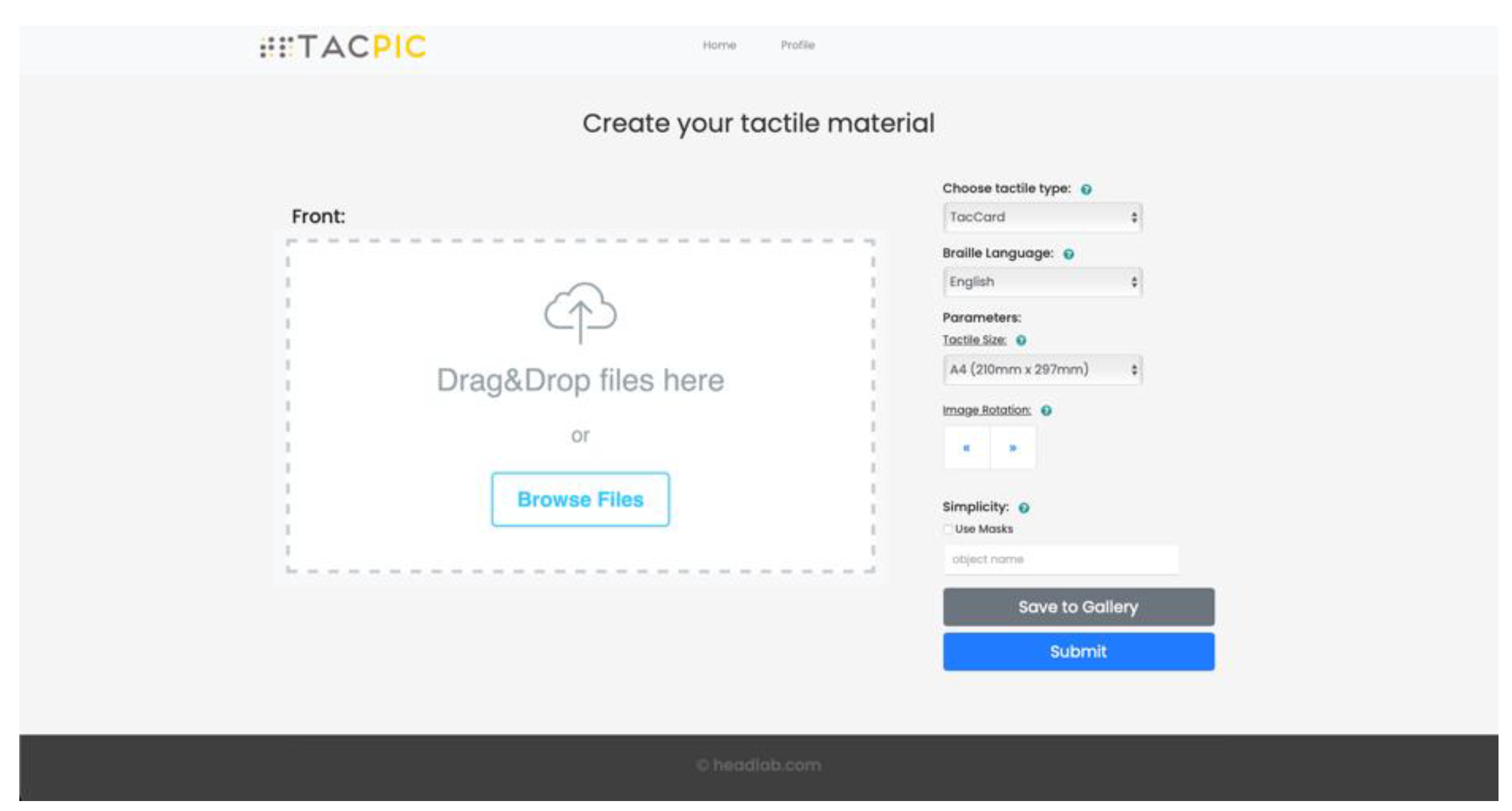

The system infrastructure is shown in Figure 3. The user first inputs an image through the web interface and sets the different parameters, such as the type of tactile material, the material size, and rotations. Then, when the user presses the "Submit" button, the web interface sends the image and its parameters to the AWS backend and starts the process. The web interface then fetches the STL file from the output S3 bucket and displays the resulting TEM for inspection by the user. The TEM creation process is as follows:

- The user uploads an image to the customization interface and sets the parameters for the image type, size, etc.
- The image is stored in a user-specific S3 bucket partition together with its parameters.
- This event triggers a Lambda function that passes the image to two Lambda functions for masking and pre-processing. After both processes are completed, each stores its output in another S3 bucket.
- Next, another Lambda function extracts the required information and labels from the outputs of the previous step.
- Then, the image from the previous step is processed by the TacPic Modeling Lambda function to create the 3D models of the TEM before combining the braille words and images. Finally, the 3D STL file from this step is stored in the output S3 bucket.
- The 3D viewer interface collects the 3D STL file from the S3 bucket and displays an interactive 3D model that can be inspected.
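The event-driven start of this pipeline can be sketched as a Python Lambda handler that reads the bucket name and object key from a standard S3 event notification (S3 URL-encodes object keys in these events, hence `unquote_plus`). This is a hypothetical sketch: the `uploads/<user_id>/<file>` key layout, the `tacpic-output` bucket name, and the returned job fields are assumptions for illustration, and a real handler would go on to invoke the masking and pre-processing functions (for example through the AWS SDK) rather than just returning the parsed jobs.

```python
import json
from urllib.parse import unquote_plus

OUTPUT_BUCKET = "tacpic-output"  # hypothetical output bucket name

def parse_s3_event(event):
    """Turn each S3 'object created' record into a job description."""
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        parts = key.split("/")
        # Assumed layout: uploads/<user_id>/<image file>
        user_id = parts[1] if len(parts) >= 3 else "anonymous"
        stem = parts[-1].rsplit(".", 1)[0]
        jobs.append({
            "bucket": bucket,
            "key": key,
            "user_id": user_id,
            "output_bucket": OUTPUT_BUCKET,
            "stl_key": f"{user_id}/{stem}.stl",
        })
    return jobs

def lambda_handler(event, context):
    jobs = parse_s3_event(event)
    # A real handler would fan out to the masking and pre-processing functions here.
    return {"statusCode": 200, "body": json.dumps(jobs)}
```

Deriving the output key from the upload key keeps each user's STL files in their own partition, which mirrors the user-specific storage step in the list above.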

The system was developed in a Python environment. The algorithms were based on Python implementations from multiple libraries, namely TensorFlow, OpenCV, and NumPy. The TensorFlow library was used to perform object detection and instance segmentation on images. The OpenCV library was used for the image processing algorithms. Finally, the NumPy library was used for image registration and 3D STL generation. This implementation was deployed on the AWS platform for ease of integration with different features, which is beneficial if the algorithm is deployed within a web-based or mobile application. The AWS Elastic Container Registry (ECR) was used to store the different algorithms. The algorithms were packaged as Docker containers and connected to the AWS Lambda service so that the containers could be run remotely without a dedicated server. Different AWS S3 buckets were used to store the images and STL files used during testing. The whole process within the AWS platform is shown in Figure 3.

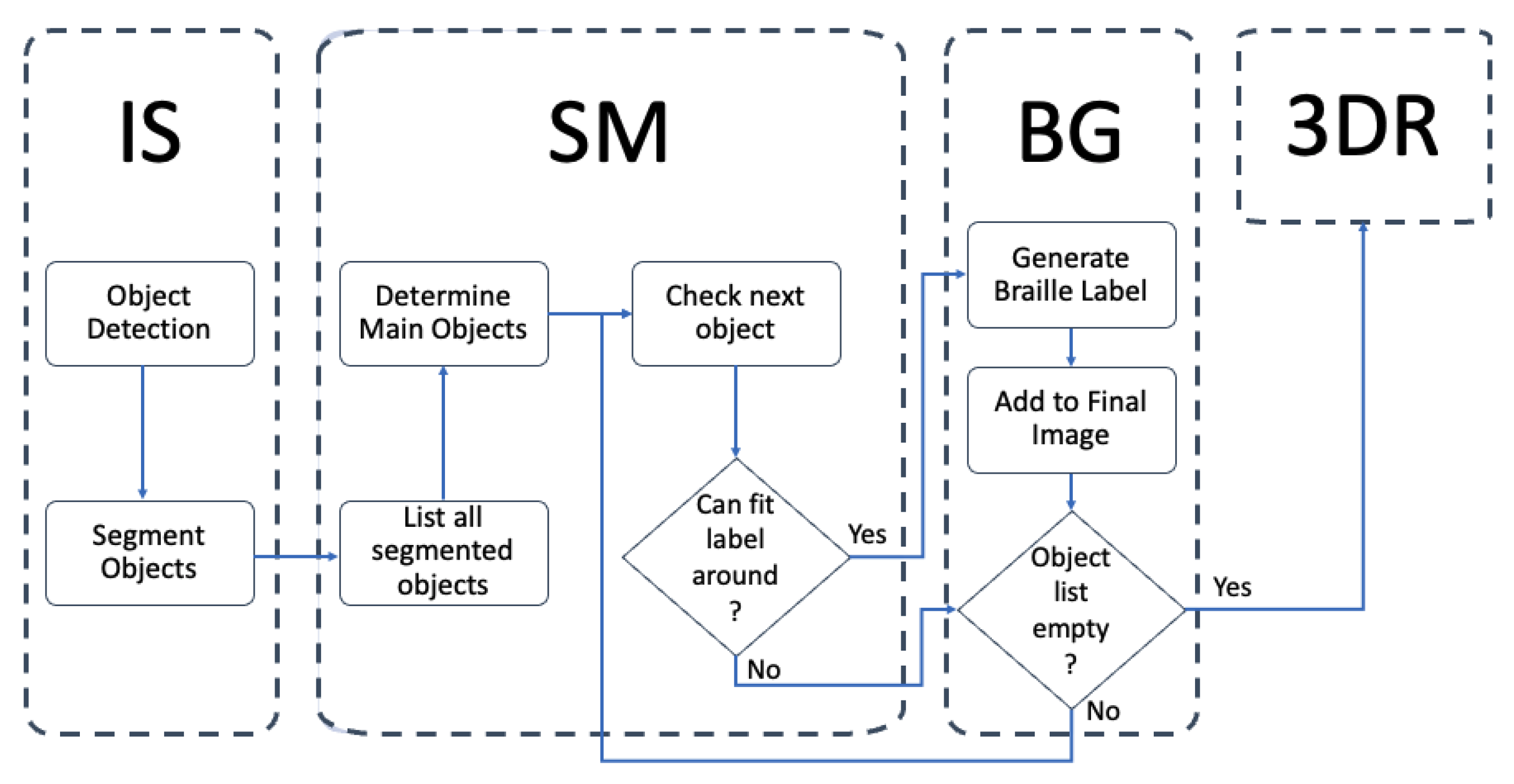

2.2. Selective Model for Object Masks

The guidelines for creating the tactile graphics followed the concept of "less is more." We considered that users would understand a tactile graphic more easily if it contained less information and focused on its important features. Because the haptic sense is not sensitive or accurate with respect to minute details, we developed a segmentation method that reduces the complexity of a tactile image while preserving the appropriate information. We proposed a selective model (SM) built on top of the instance segmentation method. Depending on the type of tactile material and the spread of the main objects within the image, we limit the selection to between 1 and 3 subjects within the image, even if more are detected. The algorithm is shown in Figure 4.

All of the information regarding the detected objects, including their masks, labels, and locations, is listed and then analyzed within the selective model. Different features of the masked objects are used to determine the main objects within an image: the focus level, the size of the object, and its placement within the image. Objects with higher scores are given priority within the model. To determine the focus level of an object, the Canny edge detection algorithm is applied, and the result is analyzed based on the number of detected edges. The size score of an object is based on the total number of pixels in its mask. Finally, the location score is based on the object's horizontal and vertical placement relative to the center lines of the image. The final score is computed with:

where SS is the final score for the priority, f is the feature (focus, size, location), s is the feature score, ω is the weight for the feature, p is the pixels, and o is the object. The objects are then ranked by their final score to determine which objects are above the score threshold and which is checked first. For each main object, the braille label is checked to determine whether it interferes with another object or with the boundary of the graphic, and whether the object is used for the material. Once all data are checked, they are passed to the 3D reconstruction algorithm.
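Because the equation for SS did not survive extraction, the following sketch assumes the simplest form consistent with the listed symbols: a weighted sum of per-feature scores, $SS_o = \sum_f \omega_f s_f(p_o)$, with the focus and size scores normalized by the largest value among the detected objects. It also swaps Canny for a plain gradient-magnitude threshold (via `numpy.gradient`) so that it depends only on NumPy. The weights, edge threshold, normalization, and function names are illustrative assumptions, not the published model.

```python
import numpy as np

FEATURES = ("focus", "size", "location")

def edge_count(gray, mask, thresh=30.0):
    """Count edge pixels inside the mask (gradient-magnitude threshold, a simple stand-in for Canny)."""
    gy, gx = np.gradient(gray.astype(float))
    return int(np.count_nonzero((np.hypot(gx, gy) > thresh) & mask))

def raw_scores(gray, mask):
    """Raw focus, size, and location scores for one non-empty boolean mask."""
    h, w = mask.shape
    ys, xs = np.nonzero(mask)
    # Normalized distance of the mask centroid from the vertical and horizontal center lines
    dx = abs(xs.mean() - (w - 1) / 2) / (w / 2)
    dy = abs(ys.mean() - (h - 1) / 2) / (h / 2)
    return {
        "focus": edge_count(gray, mask),
        "size": int(mask.sum()),
        "location": 1.0 - (dx + dy) / 2,  # 1.0 when centered on both center lines
    }

def select_main_objects(gray, masks, weights, max_objects=3, threshold=0.0):
    """Rank objects by an assumed weighted-sum score; keep those above threshold, up to max_objects."""
    raw = [raw_scores(gray, m) for m in masks]
    # Normalize focus and size by the largest value so all features lie in [0, 1]
    peak = {f: max(r[f] for r in raw) or 1 for f in ("focus", "size")}
    final = []
    for r in raw:
        s = {"focus": r["focus"] / peak["focus"],
             "size": r["size"] / peak["size"],
             "location": r["location"]}
        final.append(sum(weights[f] * s[f] for f in FEATURES))
    order = sorted(range(len(masks)), key=lambda i: final[i], reverse=True)
    return [i for i in order if final[i] >= threshold][:max_objects]
```

With equal weights, a large, sharp, centered object outranks a small one in a corner; setting `max_objects=1` mirrors the single-object flashcard case, and `max_objects=3` the peg-puzzle case.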

2.3. 3D Reconstruction and 3D Printing

After the image and braille label are processed, a 3D point cloud registration algorithm is applied to place the objects into a unified coordinate system. Each point is arranged based on its pixel location, pixel identifier, and pixel value. The pixel location determines where the pixel lies on the x, y grid, which simplifies meshing and the arrangement of the voxels. The pixel identifier determines whether the pixel is part of an object or the background. From the output of the selective model, only the objects that are above the threshold and selected are generated in the final image. Background pixels remain neutral for most of the process and serve as the base of the educational materials. The details within the images are dictated by the pixel value, which determines the z value of each point. This value is also affected when texture or details are added to an image. Together, these attributes register the points into a single 3D point cloud that can be passed to a meshing method.
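The registration step can be illustrated with a minimal NumPy sketch that turns a grayscale image and a selected-object mask into an (x, y, z) point cloud. The assumptions are ours: brighter object pixels map to higher relief, background pixels stay at a flat base height, and the `base_mm`, `relief_mm`, and `mm_per_px` values are placeholders rather than TacPic's parameters.

```python
import numpy as np

def image_to_point_cloud(gray, object_mask, base_mm=2.0, relief_mm=3.0, mm_per_px=0.5):
    """Register every pixel as an (x, y, z) point: x, y from pixel location, z from pixel value."""
    rows, cols = gray.shape
    r, c = np.mgrid[0:rows, 0:cols]
    # Assumed mapping: brighter object pixels rise higher; background stays at the base height
    relief = gray.astype(float) / 255.0 * relief_mm
    z = base_mm + np.where(object_mask, relief, 0.0)
    x = c * mm_per_px
    y = (rows - 1 - r) * mm_per_px  # flip rows so the image's top edge gets the largest y
    return np.column_stack([x.ravel(), y.ravel(), z.ravel()])
```

The result is an `(rows * cols, 3)` array in row-major pixel order, so neighboring pixels remain neighbors in the array, which is what makes a regular-grid triangulation straightforward afterwards.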

Finally, the point clouds of the different parts of the TEM are combined and passed to a mesh generation algorithm to create the STL file. For all types of tactile photographs, the point generation in this system fixes a 3D point array cube, represented by the sets of values $n_r = [n_{r0}\ n_{r1}\ n_{r2}\ \dots\ n_{rn}]$, $n_c = [n_{c0}\ n_{c1}\ n_{c2}\ \dots\ n_{cn}]$, and $n_d = [n_{d0}\ n_{d1}\ n_{d2}\ \dots\ n_{dn}]$, which are arranged in a two-dimensional array depicting their positions. Each value in the array corresponds to a specific point in the three-dimensional coordinate system in terms of its x, y, z coordinates. Optimal triangulation is used to enable mesh generation from the coordinate points [17]. The STL mesh is generated from the x, y, z points of the $\mathbb{R}^3$ set in the image's world coordinates, where x represents the horizontal position, y the vertical position, and z the elevation. These points are dictated by the preceding image processing algorithms. Triangulation represents the complex geometry of surfaces, in this case the tactile photographs, as simple geometry for easier interpolation of the numerical values. The optimal triangulation used was Delaunay triangulation, which allows efficient computation of the meshes [18]. These meshes are used to generate the STL file of the tactile photographs.
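For a height grid sampled at regular (x, y) positions, the Delaunay triangulation is degenerate (the four corners of each rectangular cell are cocircular), so splitting every cell along one diagonal already yields a valid Delaunay triangulation. The sketch below uses that shortcut instead of a general Delaunay routine and writes the triangles as ASCII STL. It is an illustration under assumptions: it emits only the top surface (a printable solid would also need side walls and a bottom), and the function names are hypothetical.

```python
import numpy as np

def heightmap_points(z, mm_per_px=1.0):
    """Vertices of a regular height grid; rows are flipped so the image top has the largest y."""
    rows, cols = z.shape
    r, c = np.mgrid[0:rows, 0:cols]
    return np.column_stack(
        [c.ravel() * mm_per_px, (rows - 1 - r).ravel() * mm_per_px, z.ravel()]
    ).astype(float)

def grid_triangles(rows, cols):
    """Two triangles per grid cell, wound counter-clockwise when seen from +z."""
    tris = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # top-left vertex of the cell
            tris.append((i, i + cols, i + 1))
            tris.append((i + 1, i + cols, i + cols + 1))
    return tris

def to_ascii_stl(points, triangles, name="tem"):
    """Serialize triangles as ASCII STL with right-hand-rule facet normals."""
    lines = [f"solid {name}"]
    for a, b, c in triangles:
        p0, p1, p2 = points[a], points[b], points[c]
        n = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(n)
        if norm > 0:
            n = n / norm
        lines.append(f"  facet normal {n[0]:e} {n[1]:e} {n[2]:e}")
        lines.append("    outer loop")
        for p in (p0, p1, p2):
            lines.append(f"      vertex {p[0]:e} {p[1]:e} {p[2]:e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"
```

A grid of `rows x cols` vertices produces `2 * (rows - 1) * (cols - 1)` facets; for a flat grid every facet normal points along +z, which is a quick sanity check on the winding order.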

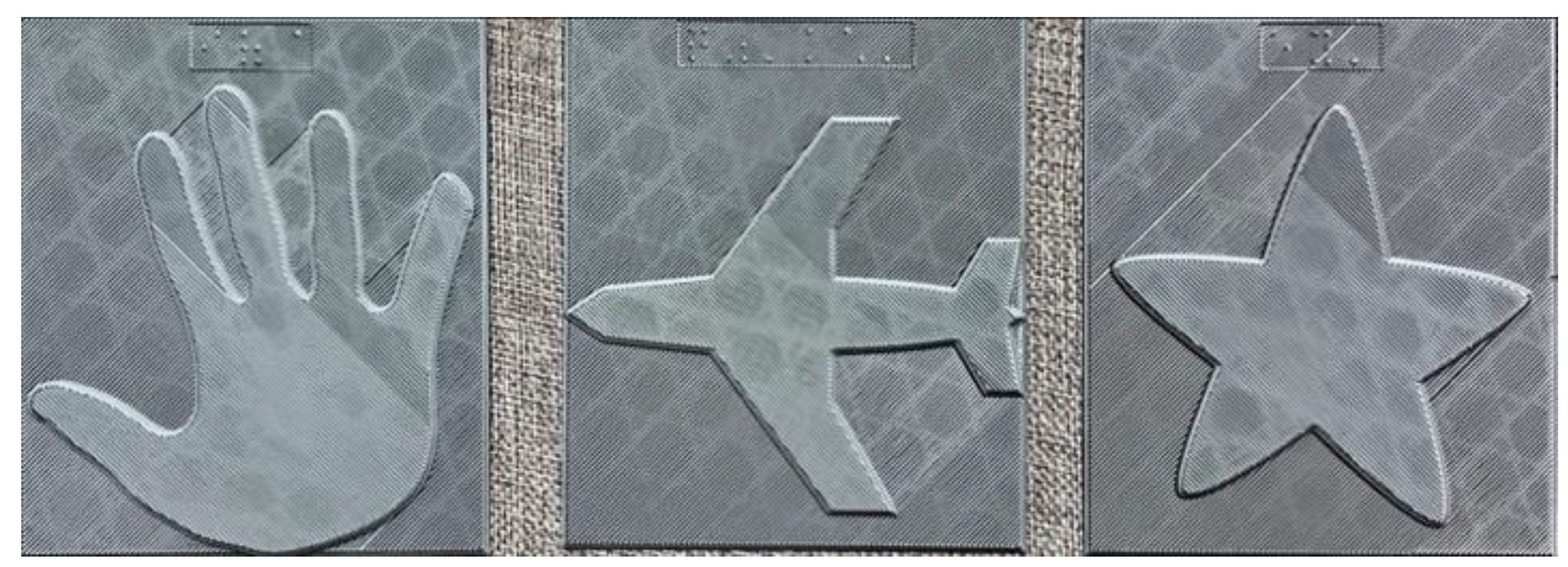

The materials generated by our algorithm were printed using a conventional extruder-based 3D printer. Different tactile materials were printed for evaluation. The materials were given a consistent size of 100 by 115 mm for the small materials and 148 by 210 mm for the large materials. We used the PING DUAL 300 D300 (Taiwan) with SpiderMaker 3D Matte PLA filament and consistent printing parameters: layer height = 0.2 mm; wall thickness = 1.2 mm; infill density = 10%; infill pattern = Trihexagonal; printing temperature = 210 °C; build plate temperature = 60 °C; retraction speed = 60 mm/s; print speed = 40 mm/s. These parameters were found to be the most compatible with the filament, the 3D printer, and the models being printed.

2.4. Tactile Educational Materials Generated

For the tactile flashcards, we developed a format in which braille captions are generated on top of the tactile graphic based on the object label determined by our object detection model. This method uses our selective model to determine one main object within the image and uses only that object for the braille label, which removes the need to check whether the braille label fits around the image. Figure 5 shows some of the tactile flashcards.
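Braille captions of this kind can be sketched for the simplest case, uncontracted (Grade 1) English letters, by mapping each letter to its standard six-dot pattern. The Python sketch below is an illustration under assumptions, not TacPic's Braille Generation module: it ignores capital and number signs, contractions, and Chinese braille, and the 2.5 mm dot spacing and 6.0 mm cell pitch are assumed values in the range commonly used for embossed braille.

```python
# Standard six-dot patterns for uncontracted English letters (dots 1-3 left column, 4-6 right).
LETTER_DOTS = {
    "a": "1", "b": "12", "c": "14", "d": "145", "e": "15", "f": "124",
    "g": "1245", "h": "125", "i": "24", "j": "245", "k": "13", "l": "123",
    "m": "134", "n": "1345", "o": "135", "p": "1234", "q": "12345",
    "r": "1235", "s": "234", "t": "2345", "u": "136", "v": "1236",
    "w": "2456", "x": "1346", "y": "13456", "z": "1356", " ": "",
}

DOT_SPACING_MM = 2.5   # assumed distance between dots within a cell
CELL_SPACING_MM = 6.0  # assumed distance between neighboring cells

def to_unicode_braille(label):
    """Render a label as Unicode braille (U+2800 block; dot k sets bit k-1)."""
    return "".join(
        chr(0x2800 + sum(1 << (int(d) - 1) for d in LETTER_DOTS[ch]))
        for ch in label.lower()
    )

def dot_centers(label):
    """(x, y) dot centers in mm for embossing; y grows downward from the top row of dots."""
    centers = []
    for cell, ch in enumerate(label.lower()):
        for d in LETTER_DOTS[ch]:
            k = int(d)
            col = 0 if k <= 3 else 1
            row = (k - 1) % 3
            centers.append((cell * CELL_SPACING_MM + col * DOT_SPACING_MM, row * DOT_SPACING_MM))
    return centers
```

For example, `to_unicode_braille("cat")` gives the three cells for c, a, and t, and `dot_centers` turns the same label into raised-dot positions that a reconstruction step could extrude. Unsupported characters raise a `KeyError` rather than being silently dropped.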

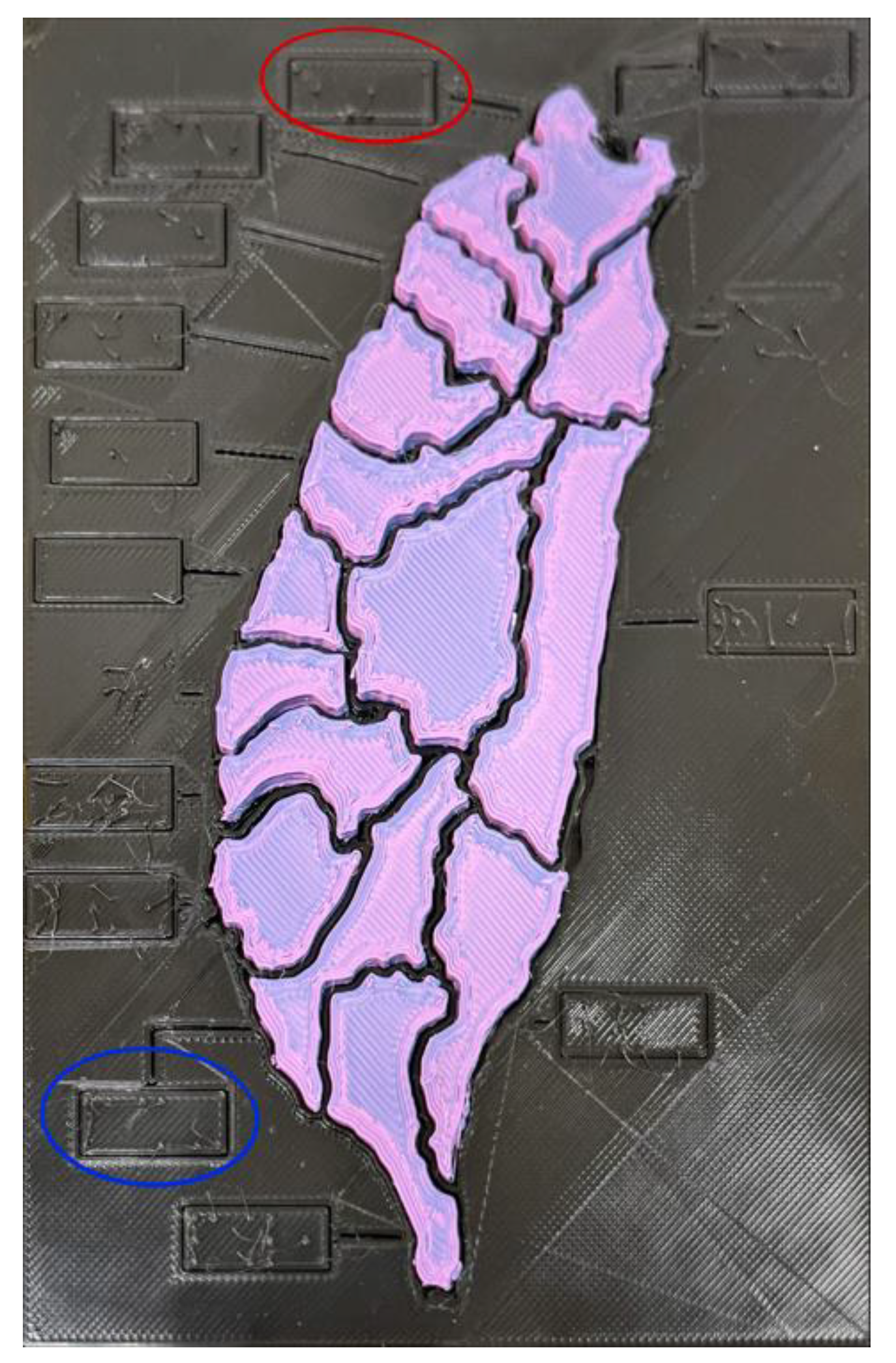

For the tactile maps, a blob detector is used to segment the image into regions of interconnected pixels with the same intensity. Each blob can then be labelled by the user. A strip packing algorithm is used to space the labels around the image, and a connecting line is drawn from each blob to its label. This algorithm is optimized by minimizing the distance from each blob to its corresponding label while avoiding overlaps with blobs and labels [19]. Each blob is generated as one part of the material. A sample tactile map is shown in Figure 6, with purple parts and a black base.
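Grouping interconnected pixels of the same intensity is essentially connected-component labelling. A minimal pure-Python sketch using breadth-first search with 4-connectivity follows; the connectivity choice, the `background` value, and the function name are assumptions, and TacPic's actual blob detector and strip-packing label placement are not reproduced here.

```python
from collections import deque

def label_blobs(img, background=0):
    """Label 4-connected regions of equal intensity; returns (label grid, number of blobs)."""
    h, w = len(img), len(img[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if img[y][x] == background or labels[y][x]:
                continue  # skip background and pixels already assigned to a blob
            count += 1
            value = img[y][x]
            labels[y][x] = count
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w and not labels[ny][nx]
                            and img[ny][nx] == value):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count
```

Requiring equal intensity, not merely non-background, keeps two touching regions of a map (for example, adjacent cities drawn in different gray levels) as separate printable parts.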

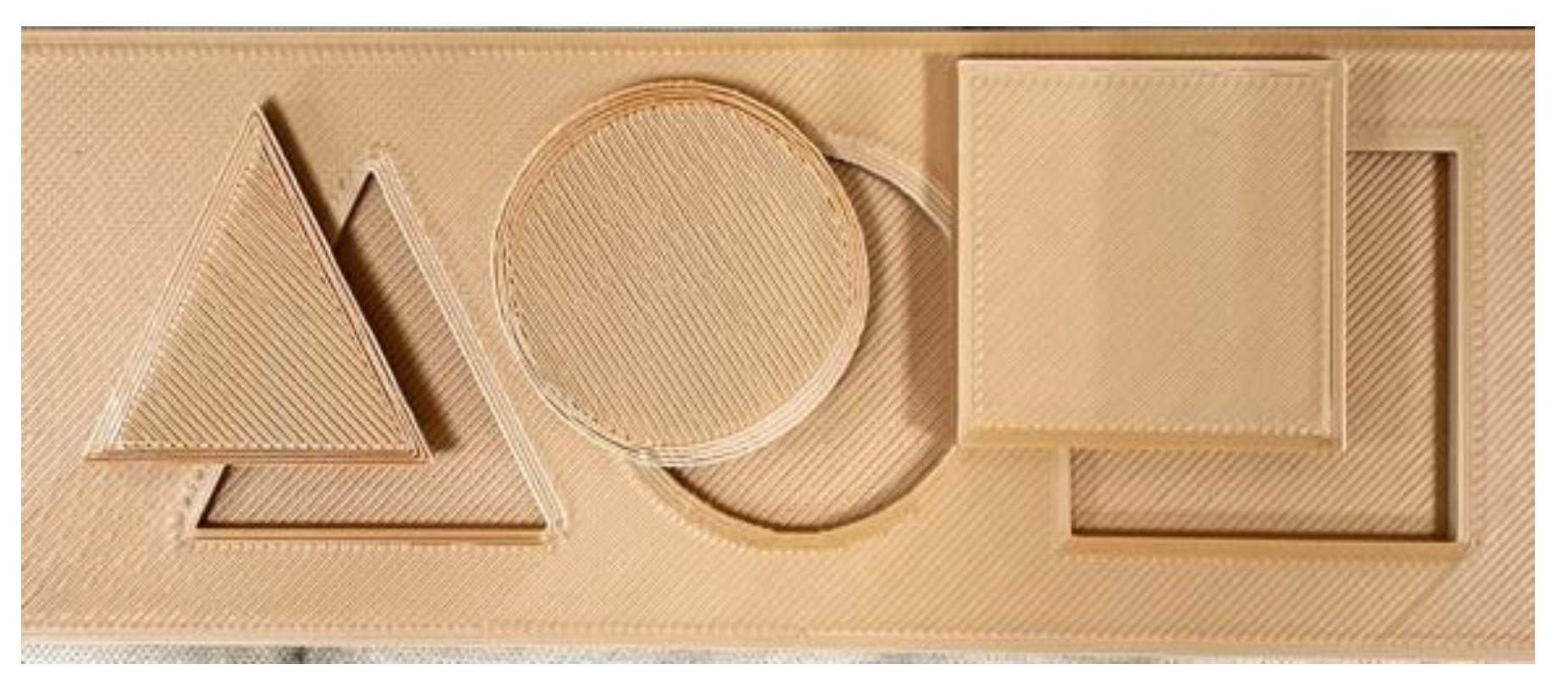

For the tactile peg puzzle, two parts were created: the base plate with braille labels and the object parts. The models were optimized so that the object parts fit into the base plate. To determine which objects are placed in the material, the selective algorithm is used to fit a maximum of 3 objects without them interfering with each other. A sample tactile peg puzzle is shown in Figure 7.
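One common way to make printed parts fit their slots is to cut each hole slightly larger than its peg. The sketch below, which is an assumption rather than the authors' stated optimization, grows the object mask by a clearance distance using binary dilation implemented with NumPy array shifts; the 0.3 mm default clearance and the `px_per_mm` scale are hypothetical values that would need calibrating for a given printer and filament.

```python
import numpy as np

def dilate(mask, radius):
    """Binary dilation of a boolean mask with a (2r+1) x (2r+1) square element, via padded shifts."""
    h, w = mask.shape
    padded = np.pad(mask, radius)  # constant padding with False
    out = np.zeros_like(mask)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            out |= padded[radius + dy: radius + dy + h, radius + dx: radius + dx + w]
    return out

def hole_for_peg(peg_mask, px_per_mm, clearance_mm=0.3):
    """Slot mask for the base plate: the peg outline grown by the clearance (at least 1 px)."""
    radius = max(1, int(round(clearance_mm * px_per_mm)))
    return dilate(peg_mask, radius)
```

The hole mask would then be subtracted from the base plate's height map, while the peg itself is printed from the original, undilated mask.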

2.5. Testing Methodology and Demographics

For system evaluation purposes, the parameters for the serverless platform, AWS Lambda, are shown in Table 1. To achieve consistent results, each Lambda function was configured with the same parameter values. The Python 3.8 runtime was used with an allocated memory of 10,240 MB.

Initially, we met with VIB teachers from the Tainan City Life Rehabilitation Center for the Visually Impaired to let them feel the current TEM. This subjective evaluation compared the different methods of producing the tactile materials and the level of simplicity within the image. We provided the teachers with 20 types of images, and they commented on which aspects were the easiest to understand. A summary of the demographics of the teachers who tested the tactile flashcards is shown in Table 2. One teacher was blind from birth and the other developed blindness at age 6.

Next, we visited the Taichung Special Education School for the Visually Impaired, where six VIB students were given the educational aids to try and comment on. Their ages are between 13 and 20 years. Of the 6 students, 4 were VIB from birth, whereas 2 developed visual impairment; 1 is fully blind, 4 have light perception, and 1 has vision in one eye. Five of the students are able to read both English and Chinese Braille, although some are more proficient in Chinese Braille, and 1 student reads large-print books using one eye. A summary of the demographics of the students is given in Table 3. After the evaluation, the students were asked about their thoughts on the tactile materials in private interviews.

The usability test for the generation website was conducted with the counselling teachers. Their task was to generate a tactile material from a 2D image using the TacPic website. They were then interviewed about their experience and asked for comments on the system. According to the ISO/IEC 25010:2011 Systems and software Quality Requirements and Evaluation (SQuaRE) quality models, the usability of a system can be assessed through the following sub-characteristics: appropriateness recognizability, learnability, operability, user error protection, user interface aesthetics, and accessibility. For usability evaluation, the most widely used approach is the empirical method, consisting of either questionnaires or usability testing, which has the advantage that feedback is obtained directly from the users.

3. Results

The main contribution of this study was the use of a selective model algorithm alongside an instance segmentation algorithm to remove unnecessary information from an image while retaining the important information. Furthermore, we introduced advanced technologies into the education of visually impaired students, together with an understandable interface that non-expert users can easily operate.

3.1. Evaluation of Selective Model

Our model is based on the Mask R-CNN model trained on the Common Objects in Context (COCO) dataset with a ResNet-50 backbone, using the Matterport implementation of Mask R-CNN [20]. In our testing, we detected all of the objects within an image, then used the selective model to remove objects that were not the main object. Figure 8 demonstrates the selective model on a sample image. As shown in Table 4, the researchers evaluated the system using images of single or multiple objects in distinct cases. The system was able to detect the main object, but experienced some failures when other objects were close to the main object. For the first seven cases, testing targeted the detection of only one object, whereas for the remaining cases it targeted the detection of up to three main objects.

3.2. Evaluation of Algorithm Speed on AWS Platform

The AWS CloudWatch Logs provided an accurate summary of the runtime of the different processes, which we used to quantify the speed of each step. Table 5 summarizes the processing time of each step for two different sizes. The larger image size generally took longer across the different processes. The first steps, pre-processing and segmentation, were run simultaneously, so only the longer of the two was counted toward the total and the shorter one could be ignored. As a result, the total time required for an image to become a TEM was within a few hours. For consistency, the materials used in testing measured 100 by 115 mm for the small materials and 148 by 210 mm for the large materials. The results show that the proposed algorithm needs only about 2 min 48.149 s (small material sample) and 4 min 25.28 s (large material sample) to generate a TEM. In addition, it takes about 2 h 47 min to print a small material sample, whereas large materials can be printed in around 6 h 37 min. This is an improvement over current methods, which normally take from 2 weeks to several months to complete, depending on the complexity of the material.
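The timing arithmetic above can be made explicit: steps that run concurrently contribute only their longest duration, and sequential steps add up. In the Python sketch below, the per-step durations passed to `pipeline_seconds` are hypothetical (the Table 5 values are not reproduced here); the end-to-end figure combines the reported small-sample generation time (2 min 48.149 s) with the reported small-sample print time (about 2 h 47 min).

```python
def pipeline_seconds(parallel_steps, sequential_steps):
    """Total wall time: concurrent steps count once (their maximum), sequential steps are summed."""
    return max(parallel_steps, default=0.0) + sum(sequential_steps)

def format_hms(seconds):
    """Format a duration in seconds as 'H h M min S.SSS s'."""
    hours, rem = divmod(seconds, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{int(hours)} h {int(minutes)} min {secs:.3f} s"

# Hypothetical step durations in seconds: pre-processing and segmentation run in parallel
generation = pipeline_seconds([12.0, 30.0], [5.0, 20.0])  # 55.0

# Reported figures: small-sample generation time plus small-sample print time
small_total = 2 * 60 + 48.149 + (2 * 3600 + 47 * 60)
print(format_hms(small_total))  # 2 h 49 min 48.149 s
```

The same calculation for a large sample (4 min 25.28 s of generation plus about 6 h 37 min of printing) gives roughly 6 h 41 min, which shows that printing, not generation, dominates the turnaround time.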

3.3. Pilot Evaluation from Teachers of the Blind

The tactile graphics we generated for the blind teachers came in two levels: a lithophane-based tactile graphic and a shape-embossed version generated using our method. Across our trials of 30 tactile graphics, the teachers preferred the simplest version of the tactile graphic, with which they were able to identify the objects within the TEM. We noted that this was because many elements of the lithophane-based tactile graphic were unnecessary and unreadable by touch. Our results showed that the graphics were more easily understood by the teacher who was not congenitally blind. The teacher attributed this to having learned shapes before developing blindness. Table 6 summarizes the testing of the flashcards with the teachers.
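The difference between the two levels can be illustrated with height maps. A lithophane maps every gray level to a thickness (darker pixels thicker), so all image detail survives as relief, whereas a shape-embossed graphic keeps only a flat base and one raised object. The NumPy sketch below assumes these conventions and uses placeholder thickness values; it is not the generator used in the trials.

```python
import numpy as np

def lithophane_heights(gray, min_mm=0.8, max_mm=3.0):
    """Lithophane convention: every gray level becomes a thickness, darker pixels thicker."""
    g = gray.astype(float) / 255.0
    return min_mm + (1.0 - g) * (max_mm - min_mm)

def embossed_heights(object_mask, base_mm=2.0, relief_mm=1.5):
    """Shape-embossed: a uniform base with a single raised level for the selected object."""
    return base_mm + object_mask.astype(float) * relief_mm
```

Applied to a smooth gradient, the lithophane produces as many distinct heights as there are gray levels, while the embossed version produces exactly two, which is one way to see why the simpler graphic is easier to read by touch.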

3.4. Evaluation of Tactile Materials

The images used to generate the TEM depicted common objects and words that children learn. For the tactile flashcards, simple shapes such as a moon, triangle, square, circle, star, hand, cloud, coffee, car, and heart were used to ensure they would be easy to understand. For the tactile maps, the map of Taiwan was used so that it would be relatable to the users. For the tactile peg puzzles, fundamental shapes such as a circle, square, and triangle were used. The TEM were simulated and generated at a uniform size and aspect ratio, with differences only between the types of materials. The students' teachers supervised the evaluation and guided them through the procedures and any graphics they did not understand.

The tactile materials generated by our system were tested by VIB students to determine whether the illustrations on the flashcards were identifiable. The peg puzzles and map were evaluated in terms of whether the students could complete them. The students were not trained in how to use the tactile educational materials prior to testing. In the first test, each of the six VIB students was given a set of 10 flashcards and instructed to use the embedded shape to try to identify each object in the set. Table 7 summarizes the results, showing that most of the students were able to identify the graphics and that some used the Braille. Most of the failed identifications involved the cloud and car objects, which are relatively complex.

For the tactile maps, which were set up like peg puzzles, the users had slight difficulty piecing together the map of Taiwan. Because the map had a complex shape, the users were initially confused, but were eventually able to place the pieces in their correct slots. With the map base, they were able to locate each Taiwanese city using the contracted city names (bei for Taipei City, gao for Kaohsiung City, etc.) and by its position relative to the other cities. They found this an interesting tool for understanding geography and the location of each city relative to the others. Images of students using the tactile materials are shown in Figure 9.

3.5. Evaluation of TacPic Website

The website we developed is shown in Figure 10. It is divided into two sections: image upload and settings selection. The website was designed for simplicity so that any user, even one untrained in tactile materials, would be able to develop TEM. The teachers noted that students have different abilities, and that it is crucial to design learning materials according to the ability of each student and to undertake further testing to provide suitable materials. It was therefore essential that the website let the user easily customize the materials. After testing the website and successfully generating TEM, the teachers found the concept of easy tactile graphics generation helpful. They were able to easily develop tactile materials from 2D images while customizing the parameters of the generated TEM. The teachers stated that the website was straightforward and easily understood on first use, so they could quickly become familiar with the system.

4. Discussion

Our selective model algorithm can easily identify the main objects within an image and ignore the other detected objects to reduce the amount of information. This improves the understandability of the tactile educational materials for visually impaired students. During our pilot testing with VIB teachers, we compared the lithophane method with our method of developing tactile graphics, and observed that the teachers preferred simpler graphics to complex ones. The students and teachers also commented that most tactile materials are difficult to acquire, particularly in Taiwan, where they are rarely manufactured.

During the evaluation of the tactile materials, the shapes were immediately recognized, but the users noted that the rectangles surrounding the Braille labels were distracting. In addition to removing these rectangles, we will round the corners of the tactile materials to reduce hazards to the users. For the tactile maps, the users had difficulty recognizing the complex shapes of the cities but could read the Braille. As with any new platform, there is a learning curve in understanding the shapes and objects being portrayed. People with prior knowledge of shapes and objects have an advantage over people without such knowledge [21]. We observed that the students and teachers who previously had vision were faster and more accurate at identifying the shapes, whereas the others, although able to understand some of the shapes, were slower. Based on our testing, there appeared to be a correlation in which students with prior knowledge of the shapes recognized them more quickly than students who had been without sight since birth. Because the tactile materials in our study were intended for training, we expected that the students would not understand all of the shapes immediately [9]. In our test results, there were only a few cases in which students did not understand the illustrations. With further training and testing, the users will potentially be better able to understand and use these shapes. In addition to the students, the teachers were instrumental in our evaluation and provided meaningful insights into the development of the generation system and the tactile materials.
Their most notable comment was that the system would be significantly improved if it offered a universal design usable by both sighted and visually impaired students, making the materials more inclusive and enjoyable for all students. Ease of understanding and ease of use are extremely important for encouraging teachers to generate TEM and to use the system more often.

The teachers found that the concept would help ease their burden in creating tactile materials, and that the website was easy to understand and easy to use. Table 8 summarizes the themes from the interviews, together with the teachers' quotes reflecting their experience and thoughts on using the TacPic system.

5. Limitations

The range of objects that our method can detect with the selective model is currently limited by the model used for instance segmentation; this can be expanded by training the model further. Another possible limitation is the limit placed on the length of Braille words in TEM generation; however, in our current model, no words exceed this limit. This can be addressed in future work. In the evaluation of the tactile materials and the TacPic system, the number of participants was limited by the restrictions imposed in response to the COVID-19 outbreak in Taiwan. Further testing of the system is needed for better representation of the general population. In addition, the teachers commented that a Chinese version of the website would be beneficial for their use. The system is intended to be used by teachers of VIB students to create TEM that engage their students and encourage them to study.
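To make the braille-length limit concrete, a rough capacity check divides the usable width of a material by the braille cell pitch. In the Python sketch below, the 5 mm margin and 6.0 mm cell pitch are assumptions, uncontracted braille is assumed to use one cell per character, and the example widths are the 100 mm and 148 mm material sizes reported in the evaluation.

```python
import math

def max_braille_cells(width_mm, margin_mm=5.0, cell_pitch_mm=6.0):
    """Rough number of braille cells that fit on one line of a material (assumed margin and pitch)."""
    usable = width_mm - 2 * margin_mm
    return max(0, math.floor(usable / cell_pitch_mm))

def label_fits(label, width_mm, **kwargs):
    """Assumes uncontracted braille: one cell per character, no capital or number signs."""
    return len(label) <= max_braille_cells(width_mm, **kwargs)
```

Under these assumptions, a 100 mm wide small material holds at most 15 cells per line and a 148 mm wide large material holds 23, so a label such as "triangle" (8 cells) fits easily, while longer city or object names would need wrapping or contraction.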

6. Conclusions

We successfully developed a platform that allows users to quickly develop personalized and individualized tactile learning materials without prior experience in tactile photograph development or 3D printing. This platform can be used to create tactile educational materials such as tactile flashcards, tactile maps, and tactile peg puzzles. These materials can support shape perception and object identification through touch, improving on current learning platforms. By taking advantage of the rapid prototyping offered by 3D printing, the proposed method gives teachers and parents of VIB students a robust means of creating different types of TEM more quickly than traditional methods, with minimal human interaction and no prior experience in 3D printing.

Although the algorithm enables the robust construction of TEM, its general variable formatting also allows for the use of other sources, such as black-and-white and RGB images, and of other preprocessing filters, which can alter the style to fit the context and information being displayed. Therefore, the system is not limited to only three types of TEM. Rather, it acts as a baseplate for the different types and styles available to the user. The proposed platform can be expanded to a variety of other data sources, such as depth [22], LiDAR [23], radar [24], or stereo imaging [25]. Currently, we are using 3D printing as the means for rapid prototyping of the developed tactile photographs. However, we are also examining other types of surface haptics generation, such as CNC milling and virtual haptic interfaces [26,27,28]. A G-code generation algorithm may be added to the system to further reduce the need for human interaction in the process from input to output.
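One simple way to feed other sources, such as grayscale images or depth maps, into a relief-based pipeline is to scale each value linearly into a printable height range. The sketch below is an illustrative assumption, not the authors' preprocessing: `base_mm` and `relief_mm` are hypothetical parameters, and the input is a plain nested list so that no imaging library is required.

```python
from typing import List

Grid = List[List[float]]


def to_height_map(values: Grid, base_mm: float = 2.0, relief_mm: float = 3.0,
                  invert: bool = False) -> Grid:
    """Linearly map raw values (e.g., grayscale or depth) to print heights in mm.

    The smallest value maps to base_mm and the largest to base_mm + relief_mm.
    With invert=True the mapping is flipped, so small values (e.g., near depths
    or dark pixels) become the tallest features. Both defaults are placeholders.
    """
    flat = [v for row in values for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    heights: Grid = []
    for row in values:
        out_row = []
        for v in row:
            t = 0.0 if span == 0 else (v - lo) / span  # normalize to [0, 1]
            if invert:
                t = 1.0 - t
            out_row.append(base_mm + t * relief_mm)
        heights.append(out_row)
    return heights


if __name__ == "__main__":
    depth = [[0.5, 1.0], [1.5, 2.0]]  # toy depth values in meters
    print(to_height_map(depth, invert=True))
```

The resulting grid of heights would still have to be meshed (for example, by triangulation) before slicing and printing; that step is outside this sketch.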

A future area of research that can be integrated into this study is the concept of color theory for tactile systems. Patterns can be used to correspond to different colors or shapes to provide more information to VIB students, as shown in [29]. Further optimization of the algorithms to improve segmentation, and the inclusion of other features such as color and texture, can also be undertaken. The teachers of visually impaired students can continually generate tactile graphics using our platform and provide feedback on the new features that we introduce into the system. Finally, new printing materials and methods are needed to hasten the printing process and enable more efficient mass production of the tactile educational materials.
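As a hypothetical illustration of mapping colors to tactile patterns, a region's average color can be snapped to the nearest entry of a small palette, with each palette entry assigned a distinct fill pattern. The palette values and pattern names here are placeholders chosen for this sketch; they are not taken from TacPic or from [29].

```python
from typing import Dict, Tuple

RGB = Tuple[int, int, int]

# Hypothetical palette of reference colors and the tactile fill assigned to each.
PALETTE: Dict[str, RGB] = {
    "red": (220, 30, 30),
    "blue": (30, 60, 220),
    "green": (40, 170, 60),
    "yellow": (240, 220, 40),
}
PATTERNS: Dict[str, str] = {
    "red": "dense dots",
    "blue": "horizontal ridges",
    "green": "diagonal ridges",
    "yellow": "sparse dots",
}


def nearest_color(rgb: RGB) -> str:
    """Name of the palette color closest to rgb (squared Euclidean distance in RGB)."""
    return min(PALETTE, key=lambda name: sum((a - b) ** 2 for a, b in zip(rgb, PALETTE[name])))


def tactile_pattern(rgb: RGB) -> str:
    """Tactile fill pattern for a region whose average color is rgb."""
    return PATTERNS[nearest_color(rgb)]


if __name__ == "__main__":
    print(tactile_pattern((200, 40, 50)))
```

Plain RGB distance is the simplest choice; a perceptual color space would match human color naming more closely, at the cost of an extra conversion step.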

7. Patents

This work currently has a patent application under review in the Republic of China, Taiwan.

Author Contributions

Conceptualization, A.R.S.; methodology, A.R.S. and W.D.A.; software, W.D.A.; validation, W.D.A. and A.R.S.; formal analysis, A.R.S., W.D.A.; investigation, W.D.A.; data curation, W.D.A.; writing—original draft preparation, A.R.S., W.D.A.; writing—review and editing, A.R.S.; supervision, A.R.S.; project administration, A.R.S.; funding acquisition, A.R.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Ministry of Science and Technology (MOST), Taiwan, under Grant MOST 109-2222-E-218-001–MY2 and by the Ministry of Education, Taiwan, under Grant MOE 1300-108P097.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to thank the National Taichung Special Education School for the Visually Impaired, Resources for the Blind in the Philippines, Tainan City Life Rehabilitation Center for the Visually Impaired, and Tainan City You Ming Visually Impaired Association, who provided us with insights and inspiration for the project.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lemons, C.J.; Vaughn, S.; Wexler, J.; Kearns, D.G.; Sinclair, A.C. Envisioning an improved continuum of special education services for students with learning disabilities: Considering intervention intensity. Learn. Disabil. Res. Pract. 2018, 33, 131–143.

- Kirjavainen, T.; Pulkkinen, J.; Jahnukainen, M. Special education students in transition to further education: A four-year register-based follow-up study in Finland. Learn. Individ. Differ. 2016, 45, 33–42.

- Fernandes, P.R.D.S.; Jardim, J.; Lopes, M.C.D.S. The Soft Skills of Special Education Teachers: Evidence from the Literature. Educ. Sci. 2021, 11, 125.

- Gucyeter, S.; Erdogan, S.C. Creative children in a robust learning environment: Perceptions of special education teacher candidates. Think. Ski. Creat. 2020, 37, 100675.

- Bourne, R.; Resnikoff, S.; Ackland, P. GBVI—Changes in Numbers & Prevalence. Available online: http://atlas.iapb.org/global-burden-vision-impairment/gbvi-changes-numbers-prevalence/ (accessed on 30 September 2020).

- World Health Organization. Blindness and Vision Impairment. Available online: https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment (accessed on 30 September 2020).

- Mejía, P.; Martini, L.C.; Grijalva, F.; Larco, J.C.; Rodríguez, J.C. A Survey on Mathematical Software Tools for Visually Impaired Persons: A Practical Perspective. IEEE Access 2021, 9, 66929–66947.

- Wongkia, W.; Naruedomkul, K.; Cercone, N. i-Math: Automatic math reader for Thai blind and visually impaired students. Comput. Math. Appl. 2012, 64, 2128–2140.

- Chen, Y.-H.; Chang, P.-L. 3D printing assisted in art education: Study on the effectiveness of visually impaired students in space learning. In Proceedings of the 2018 IEEE International Conference on Applied System Invention (ICASI), Tokyo, Japan, 13–17 April 2018; pp. 803–806.

- Shahira, K.; Lijiya, A. Towards Assisting the Visually Impaired: A Review on Techniques for Decoding the Visual Data from Chart Images. IEEE Access 2021, 9, 52926–52943.

- Flanagan, S.; Bouck, E.C.; Richardson, J. Middle school special education teachers' perceptions and use of assistive technology in literacy instruction. Assist. Technol. 2013, 25, 24–30.

- Russo-Campisi, J. Evidence-based practices in special education: Current assumptions and future considerations. In Child & Youth Care Forum; Springer: Berlin/Heidelberg, Germany, 2017; pp. 193–205.

- Riccomini, P.J.; Morano, S.; Hughes, C.A. Big ideas in special education: Specially designed instruction, high-leverage practices, explicit instruction, and intensive instruction. Teach. Except. Child. 2017, 50, 20–27.

- Reber, R.; Canning, E.A.; Harackiewicz, J.M. Personalized education to increase interest. Curr. Dir. Psychol. Sci. 2018, 27, 449–454.

- Gupta, R.; Balakrishnan, M.; Rao, P. Tactile diagrams for the visually impaired. IEEE Potentials 2017, 36, 14–18.

- Bara, F.; Gentaz, E.; Valente, D. The effect of illustrations on the reading process of tactile books: An exploratory study. J. Vis. Impair. Blind. 2018, 112, hal-01889119.

- Bern, M.; Eppstein, D. Mesh generation and optimal triangulation. Comput. Euclidean Geom. 1992, 1, 23–90.

- Chen, L.; Xu, J.-C. Optimal Delaunay triangulations. J. Comput. Math. 2004, 22, 299–308.

- Hu, Y.; Fukatsu, S.; Hashimoto, H.; Imahori, S.; Yagiura, M. Efficient overlap detection and construction algorithms for the bitmap shape packing problem. J. Oper. Res. Soc. Jpn. 2018, 61, 132–150.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Leo, F.; Cocchi, E.; Brayda, L. The effect of programmable tactile displays on spatial learning skills in children and adolescents of different visual disability. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 25, 861–872.

- Hisatomi, K.; Kano, M.; Ikeya, M.; Katayama, M.; Mishina, T.; Iwadate, Y.; Aizawa, K. Depth estimation using an infrared dot projector and an infrared color stereo camera. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 2086–2097.

- Gong, X.; Lin, Y.; Liu, J. 3D LIDAR-camera extrinsic calibration using an arbitrary trihedron. Sensors 2013, 13, 1902–1918.

- Nobis, F.; Geisslinger, M.; Weber, M.; Betz, J.; Lienkamp, M. A deep learning-based radar and camera sensor fusion architecture for object detection. In Proceedings of the 2019 Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 15–17 October 2019; pp. 1–7.

- Kazmi, W.; Foix, S.; Alenyà, G.; Andersen, H.J. Indoor and outdoor depth imaging of leaves with time-of-flight and stereo vision sensors: Analysis and comparison. ISPRS J. Photogramm. Remote Sens. 2014, 88, 128–146.

- Celani, G.; Zattera, V.; de Oliveira, M.F.; da Silva, J.V.L. "Seeing" with the Hands: Teaching Architecture for the Visually-Impaired with Digitally-Fabricated Scale Models. In Proceedings of the International Conference on Computer-Aided Architectural Design Futures, Berlin, Heidelberg, 3 July 2013; pp. 159–166.

- Carter, T.; Seah, S.A.; Long, B.; Drinkwater, B.; Subramanian, S. UltraHaptics: Multi-point mid-air haptic feedback for touch surfaces. In Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology, St. Andrews, UK, 8–11 October 2013; pp. 505–514.

- Bargerhuff, M.E.; Cowan, H.; Oliveira, F.; Quek, F.; Fang, B. Haptic glove technology: Skill development through video game play. J. Vis. Impair. Blind. 2010, 104, 688–699.

- Shin, J.; Cho, J.; Lee, S. Tactile-Color System for Accessibility of Color Education: 2.5D UV Printed Supplementary Material for Visually Impaired Students. In Proceedings of the 2021 15th International Conference on Ubiquitous Information Management and Communication (IMCOM), Seoul, Korea, 4–6 January 2021; pp. 1–7.

Figure 1. Educational materials made by a teacher (from the Taichung Special Education School for the Visually Impaired) for teaching students how to tie shoelaces.

Figure 2. Modular system infrastructure of the TacPic system.

Figure 3. The processes implemented through cloud computing using the AWS functions.

Figure 4. Flow of the instance segmentation with the integration of the selective model.

Figure 5. Samples of the tactile flashcards generated and 3D printed: (from left to right) hand, airplane, and star in English Braille.

Figure 6. Sample of a tactile map of Taiwan, generated and 3D printed in Chinese Braille, in which the contracted name of each city is used (bei for Taipei City in red, gao for Kaohsiung City in blue, etc.).

Figure 7. Sample of the generated and 3D printed tactile peg puzzle.

Figure 8. Test sample of the result (a) when passed to the Mask R-CNN framework and (b) when the selective model is added.

Figure 9. Students from the VIB school testing the tactile educational materials.

Figure 10. TacPic website.

Table 1. Software and hardware platform used for development.

| Parameter | Platform Used |
|---|---|
| Platform | AWS Lambda |
| Development Language | Python 3.8 |
| Framework | TensorFlow |
| Memory Allocation | 10,240 MB |

Table 2. Demographics of the teachers.

| | Number |
|---|---|
| Participants | 2 teachers |
| Perception | 2 fully blind |
| Acquired | 1 congenital, 1 acquired |
| Braille | Both can read Chinese and English Braille |

Table 3. Demographics of the students.

| | Number |
|---|---|
| Participants | 6 students |
| Perception | 5 partial blindness, 1 fully blind |
| Acquired | 4 congenital, 2 acquired |
| Braille | 5 can read Chinese and English Braille; 1 reads using books with large characters |

Table 4. Performance of masking.

| Test Number | Set Main Object(s) | Predicted Main Object(s) | Removed Object(s) | Correct? |
|---|---|---|---|---|
| 1 | Dog 2 | Dog 2 | Dog 1, Dog 3 | Yes |
| 2 | Person | Person | Ball | Yes |
| 3 | Hydrant | Dog | Hydrant | No |
| 4 | Car 1 | Car 1 | Stoplight | Yes |
| 5 | Giraffe | Giraffe | Zebra | Yes |
| 6 | Elephant | Elephant | Person 1, Person 2 | Yes |
| 7 | Couch | Couch | Plant | Yes |
| 8 | Motorbike, Person | Motorbike, Person | Stoplight | Yes |
| 9 | Motorcycle, Person | Motorcycle, Person | Plant | Yes |
| 10 | Cat, Dog, Rabbit | Cat, Dog, Rabbit | Mouse | Yes |
| 11 | Cow, Horse | Cow, Horse | Car, Person 1, Person 2, Person 3 | Yes |
| 12 | Car 1, Car 2 | Car 1, Car 2 | Car 3 | Yes |
| 13 | Person, Noise, Person | Person, Noise | Person, Ball | No |
| 14 | Person 3, Ball | Person 1, Person 3, Ball, Ball | Person 2 | No |
| 15 | Person 4, Ball | Person 1, Person 2, Person 3, Person 4, Ball | Person 5 | No |
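Tallying the "Correct?" column of Table 4 gives the overall success rate of the selective masking: 11 of the 15 tests (about 73.3%) were correct, and the failures were tests 3, 13, 14, and 15. The short script below only recounts the values transcribed from the table.

```python
# "Correct?" column of Table 4, tests 1-15 in order (True = Yes, False = No).
correct = [True, True, False, True, True, True, True, True,
           True, True, True, True, False, False, False]

n_correct = sum(correct)                     # booleans sum as 0/1
accuracy = n_correct / len(correct)
failed_tests = [i + 1 for i, ok in enumerate(correct) if not ok]

print(f"{n_correct}/{len(correct)} correct ({accuracy:.1%}); failed tests: {failed_tests}")
```

Three of the four failures (tests 13 to 15) involve scenes with several people and a ball, where extra instances were kept or the wrong instance was removed.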

Table 5. Speed of the different processes.

| Processes | 100 by 115 mm (Average) | 148 by 210 mm (Average) |
|---|---|---|
| Pre-processing | 0.737 s | 1.214 s |
| Segmentation | 1 m 45.219 s | 1 m 49.077 s |
| 3D Reconstruction | Base: 33.329 s; Parts: 28.864 s | Base: 1 m 19.157 s; Parts: 1 m 15.835 s |
| 3D Printing | Base: 2 h 7 m; Parts: 38 m | Base: 5 h 43 m; Parts: 50 m |
| Total | 2 h 47 m | 6 h 37 m |
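The totals in Table 5 can be checked by summing the stage durations: the reported values (2 h 47 m and 6 h 37 m) match the stage sums truncated to whole minutes, and 3D printing accounts for roughly 98% of the total in both sizes. The script below is only a worked check of that arithmetic on the transcribed values.

```python
from typing import Dict


def to_seconds(h: int = 0, m: int = 0, s: float = 0.0) -> float:
    """Convert an hours/minutes/seconds duration to seconds."""
    return h * 3600 + m * 60 + s


def fmt(seconds: float) -> str:
    """Format seconds as 'H h M m S s', truncating fractional seconds."""
    h, rem = divmod(int(seconds), 3600)
    m, s = divmod(rem, 60)
    return f"{h} h {m} m {s} s"


# Average stage durations transcribed from Table 5 (base and parts summed).
small: Dict[str, float] = {  # 100 by 115 mm
    "pre-processing": to_seconds(s=0.737),
    "segmentation": to_seconds(m=1, s=45.219),
    "3D reconstruction": to_seconds(s=33.329) + to_seconds(s=28.864),
    "3D printing": to_seconds(h=2, m=7) + to_seconds(m=38),
}
large: Dict[str, float] = {  # 148 by 210 mm
    "pre-processing": to_seconds(s=1.214),
    "segmentation": to_seconds(m=1, s=49.077),
    "3D reconstruction": to_seconds(m=1, s=19.157) + to_seconds(m=1, s=15.835),
    "3D printing": to_seconds(h=5, m=43) + to_seconds(m=50),
}

for name, stages in (("100 by 115 mm", small), ("148 by 210 mm", large)):
    total = sum(stages.values())
    share = stages["3D printing"] / total
    print(f"{name}: total {fmt(total)}, 3D printing {share:.1%} of total")
```

Because printing dominates, faster printing materials and methods would shorten the pipeline far more than further speedups in segmentation or reconstruction.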

Table 6. Testing with the tactile flashcards.

| Teacher | Used Tactile Graphics (Correct) | Used Braille (Correct) | Failed |
|---|---|---|---|
| Acquired Blindness | 20 | 0 | 0 |
| Congenital Blindness | 8 | 11 | 1 |

Table 7. Testing with the tactile flashcards.

| Student Number | Used Tactile Graphics (Correct) | Used Braille (Correct) | Failed |
|---|---|---|---|
| 1 | 4 | 6 | 0 |
| 2 | 8 | 1 | 1 |
| 3 | 9 | 0 | 1 |
| 4 | 9 | 1 | 0 |
| 5 | Can see from one eye | | |
| 6 | 6 | 3 | 1 |
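Pooling the counts in Tables 6 and 7 gives an overall identification rate per group: 39 of 40 flashcards for the two teachers and 47 of 50 for the five students with reported counts. This pooling is an illustrative addition; the tables themselves report only per-participant counts, and student 5 is excluded because no counts are given.

```python
from typing import Dict, Tuple

Counts = Tuple[int, int, int]  # (correct via tactile graphics, correct via Braille, failed)

# Counts transcribed from Table 6 (teachers) and Table 7 (students).
teachers: Dict[str, Counts] = {
    "acquired blindness": (20, 0, 0),
    "congenital blindness": (8, 11, 1),
}
students: Dict[str, Counts] = {
    "1": (4, 6, 0),
    "2": (8, 1, 1),
    "3": (9, 0, 1),
    "4": (9, 1, 0),
    # Student 5 is left out: Table 7 gives no counts ("Can see from one eye").
    "6": (6, 3, 1),
}


def summarize(results: Dict[str, Counts]) -> Dict[str, float]:
    """Pool counts over participants and return overall rates."""
    tactile = sum(t for t, _, _ in results.values())
    braille = sum(b for _, b, _ in results.values())
    failed = sum(f for _, _, f in results.values())
    total = tactile + braille + failed
    return {
        "trials": total,
        "correct_rate": (tactile + braille) / total,
        "tactile_share_of_correct": tactile / (tactile + braille),
    }


for group, results in (("teachers", teachers), ("students", students)):
    print(group, summarize(results))
```

Pooling hides individual differences, such as the congenitally blind teacher relying on Braille for more than half of the correct answers, so the per-participant rows remain the primary result.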

Table 8. Summary of the themes based on the qualitative evaluation of the TacPic system and tactile materials.

| Theme | Representative Quotes |
|---|---|
| Importance of Customizability | The most challenging task was that different students have different abilities, and we should design the teaching material accordingly; The teaching material should be interesting to trigger their motivation. The teaching material should be suitable for the level of each student, where it should not be too easy or too hard for them. |
| Creative Solution | The TacPic system has a good concept that can be improved by employing a universal design that can develop learning materials for use by both normal and visually impaired students; The idea of the teaching material generation is good, I hope in the future when the system is mature we can use it to design the materials according to the ability of each student. |
| Easy to use | Just looking at the website already tells how it can be used; The website looks simple and easy to use. We hope it can also have a Chinese version for teachers in Taiwan. |

Publisher's Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Source: https://www.mdpi.com/2076-3417/11/16/7552/htm
